“Technology is Neither Good, Nor Bad; Nor is it Neutral”: The Case of Algorithmic Biasing

This post is one of two in a series about algorithmic biasing.

In his 1985 address as president of the Society for the History of Technology (SHOT), Melvin Kranzberg outlined “Kranzberg’s Laws,” a series of six truisms about the role of technology in society. Kranzberg’s First Law states: “Technology is neither good nor bad; nor is it neutral.”[1] The first clause of this proclamation challenges technological determinism: Kranzberg rejected his colleagues’ reductionist assumption that technology determines cultural values and societal outcomes. The second clause acknowledges that technology can propagate disparate outcomes. Taken together, the two clauses suggest that machines and programs are only as impartial as the humans who create them.

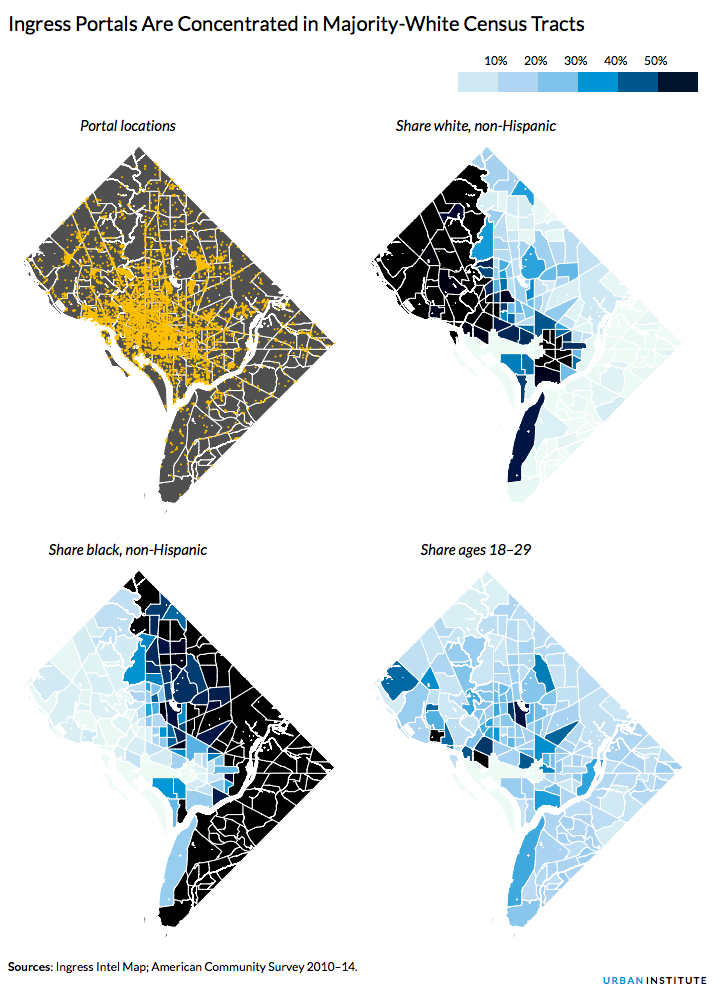

Now, 30 years after Kranzberg’s address, technology commentators and social theorists are rediscovering Kranzberg’s First Law, particularly as it relates to the problem of algorithmic bias (a phenomenon that occurs when an algorithm reflects the biases of its creator). The Urban Institute recently released a report accusing the augmented-reality game Pokémon Go of inadvertent “Pokéstop redlining.”[2] Because the algorithm that the game uses to locate Pokéstops draws from a crowdsourced database of historical markers that are contributed disproportionately by young, white males, Pokéstops are overwhelmingly concentrated in majority-white neighborhoods.

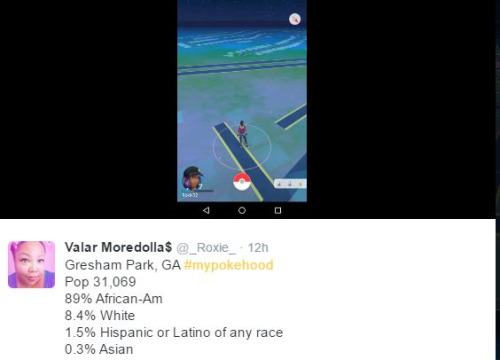

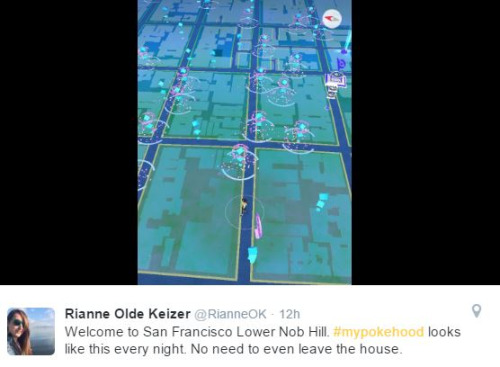

Pokémon Go players living in majority-black neighborhoods took to Twitter to highlight this disparity:

[Embedded tweets] Source: Culturalpulse.org

Pokémon Go’s virtual place-making algorithm is just one example of purported algorithmic bias. Criminal justice scholars have also begun to scrutinize risk assessment scores, which algorithmically forecast a defendant’s probability of reoffending. While states now allow judges to factor risk assessment scores into their sentencing decisions, there is debate about whether the algorithms that generate these scores decrease or increase racial disparities in sentencing relative to judges’ subjective decision-making. A recent ProPublica report suggested that the Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) algorithm, one of the most widely used risk assessment algorithms on the market, was 77 percent more likely to assign higher risk scores to black defendants than to white defendants, even after controlling for prior crimes, future recidivism, age, and gender.[3] A follow-up Brookings commentary, however, rejects ProPublica’s conclusions on methodological grounds, indicating the need for further discussion.[4]

Proponents of algorithmic risk assessments argue that they serve as a useful tool to combat the implicit biases that factor into judges’ subjective decision-making. But that claim holds true only if the algorithms underlying risk assessment scores are not themselves racially biased. If these algorithms are indeed biased against black defendants, the use of risk assessment scores in sentencing decisions could amplify racial disparities in sentencing between black and white defendants.
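For readers wondering what a claim like “77 percent more likely, even after controlling for” other factors means in practice: figures of this kind are commonly derived from a logistic regression, whose adjusted odds ratio is then converted into an approximate relative risk. The sketch below illustrates only that arithmetic. It is not ProPublica’s code, the function name is hypothetical, and the input numbers are invented for illustration rather than taken from any report.

```python
def odds_ratio_to_relative_risk(odds_ratio: float, baseline_risk: float) -> float:
    """Convert an adjusted odds ratio into an approximate relative risk.

    baseline_risk is the probability of the outcome (here, receiving a
    higher risk score) in the reference group.
    """
    if not 0.0 <= baseline_risk <= 1.0:
        raise ValueError("baseline_risk must be between 0 and 1")
    if odds_ratio <= 0.0:
        raise ValueError("odds_ratio must be positive")
    # Standard odds-ratio-to-risk-ratio conversion.
    return odds_ratio / (1.0 - baseline_risk + baseline_risk * odds_ratio)


# Hypothetical inputs, chosen only for illustration (not from any report).
hypothetical_odds_ratio = 2.0      # adjusted odds ratio for the group of interest
hypothetical_baseline_risk = 0.2   # share of the reference group scored "higher risk"

relative_risk = odds_ratio_to_relative_risk(
    hypothetical_odds_ratio, hypothetical_baseline_risk
)
percent_more_likely = (relative_risk - 1.0) * 100.0
print(f"{percent_more_likely:.0f} percent more likely")
```

The point of the conversion is that an odds ratio overstates “how much more likely” an outcome is whenever the outcome is common in the reference group, which is one reason methodological details matter in disputes over whether a scoring algorithm is biased.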

[1] Melvin Kranzberg, “Technology and History: Kranzberg’s Laws,” Technology and Culture 27.3 (1986): 547.

[2] Shiva Kooragayala and Tanaya Srini, “Pokémon GO is changing how cities use public space, but could it be more inclusive?,” Urban.org, August 2, 2016, http://www.urban.org/urban-wire/pokemon-go-changing-how-cities-use-public-space-could-it-be-more-inclusive.

[3] Jeff Larson et al., “How We Analyzed the COMPAS Recidivism Algorithm,” ProPublica.org, May 23, 2016, https://www.propublica.org/article/how-we-analyzed-the-compas-recidivism-algorithm.

[4] Jennifer L. Doleac and Megan Stevenson, “Are Criminal Risk Assessment Scores Racist?,” Brookings.edu, August 22, 2016, https://www.brookings.edu/blog/up-front/2016/08/22/are-criminal-risk-assessment-scores-racist/.